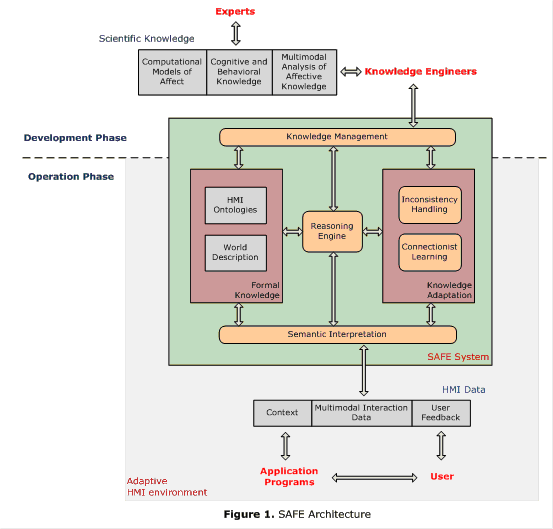

This networking session aims to identify future directions in affective and person-centred computing by amalgamating related research findings within a unified knowledge framework. Such a framework would combine affect-aware, cognitive and behavioural models with results and knowledge on multimodal affect and context analysis. It would also be able to represent and handle uncertain and scalable affective knowledge, and to adapt that knowledge to specific user behaviours, contexts of interaction and changes in the environment.

Core participants in this session are world-leading researchers in the fields of emotion and affect recognition, knowledge technologies, and neural network and neural-symbolic learning and analysis. Related developments will draw on the extensive experience the partners have gained through participation in numerous EC projects, as well as in state-of-the-art standardisation activities (within the W3C framework) in the field of Knowledge Technologies.

Affective computing has attracted great interest in recent years and has been researched across various disciplines, including perception, interpretation, cognition and interaction/expression. Within this context, many EU projects and networks (e.g. Ermis, Safira, Humaine and Semaine) have been funded to investigate different aspects of affective interaction, such as theories and computational models of emotion processes, databases, signal analysis and recognition, and the generation of embodied conversational agents. Research results mostly concern the analysis of affective and emotional theories and related computational models; the extraction of affective cues from single or multiple sensory inputs; the modelling of affective states; the analysis and recognition of user states based on the extracted cues; the generation of synthetic characters that communicate different expressive states and attitudes; the creation of databases of affective interactions for training and testing analysis and synthesis techniques; and the integration of all the above into interactive environments. These activities have produced systems that model and analyse unimodal or multimodal affective cues using statistical information and rules, data sets and environments for user state detection, and interactive Embodied Conversational Agents.

Despite these achievements, the field remains considerably fragmented and case-dependent, failing to address emerging requirements such as human-like interaction, less constrained environments and adaptive artificial systems. Some common frameworks are available: discrete, dimensional and component-based emotion models; models of cognitive and goal-based interaction; annotation models such as FACS or MPEG-4 AFX for face and body motion analysis and synthesis; feature sets from speech and biosignal analysis; and multimodal integrators based on feature-level or decision-level fusion. However, most individual approaches fail to address issues such as synchronisation, contradiction and co-existence, which makes it very difficult to interweave the above findings. In addition, fusing different signals and cues requires inference over, for example, contradictory or ambiguous emotional cues, while ensuring that the interaction remains appropriate in terms of user experience. Consequently, what is missing is a common knowledge framework that integrates and consistently represents the taxonomies, rules and correlations arising from the above theories, models and results. The need for such a unified knowledge framework is evidenced by two facts: the performance of multimodal input fusion is far from satisfactory across varying environments, and complete interaction loops are still lacking, i.e. loops in which analysis feeds synthesis with information that the latter expresses and that the former then reuses through the user's affective feedback. Moreover, the inclusion of cognitive components and context information makes the situation more complex and such systems very hard to benchmark.