IVA

IVA SymCity: Feature Selection by Symmetry

The bag-of-words (BoW) representation on local features and descriptors, along with geometry verification, has been very successful at particular object retrieval. At large scale, the current bottleneck appears to be the memory footprint of the index. So far, feature selection is probably the only practical alternative. An increasingly popular idea is to select features from multiple views. In practice however, most images are unique, in the sense that they depict a unique view of an object or scene in the dataset and there is nothing to compare to.

We consider exactly such unique images in this work, using the very same idea: feature selection by self-similarity. We develop two self-matching alternatives and apply them between each image and either itself or its reflection, where tentative feature correspondences are found in the descriptor space, without quantization. In effect, we detect repeating patterns or local symmetries and index only the participating features. The underlying motivation is that features repeating within a single image are quite likely to repeat across different views as well, hence are good candidates for a more compact image representation that does not sacrifice performance.

The self-matching methods are inspired by state-of-the-art spatial matching methods: fast spatial matching (FSM) introduced by Philbin etal., and Hough pyramid matching (HPM), introduced by Tolias and Avrithis. The corresponding proposed adapted methods for self-matching are SSM and HPSM respectively.

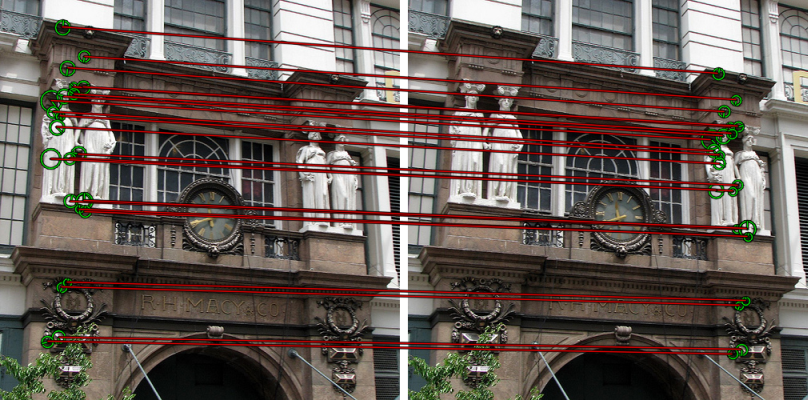

Left: sample group of inliers found by SSM on original image, capturing a repeating pattern. Right: sample group found by SSM between original and flipped images, capturing a symmetry.

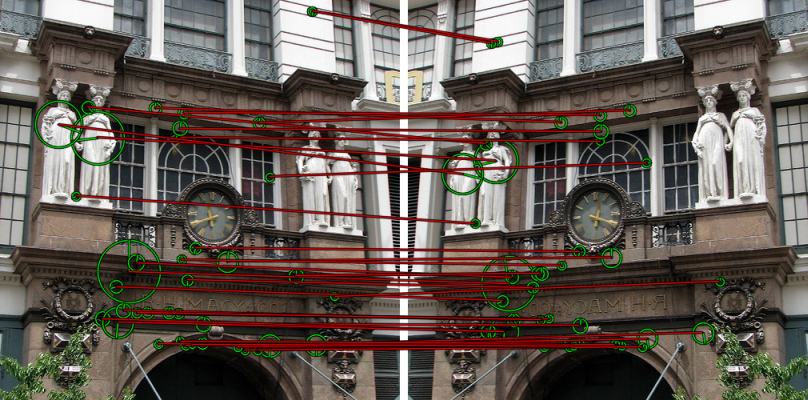

Flipped matching with HPSM at $L=5$ levels. Left: correspondences in a single bin at level $0$, revealing a symmetric feature group. Right: all tentative correspondences, with red (yellow) being the strongest (weakest).

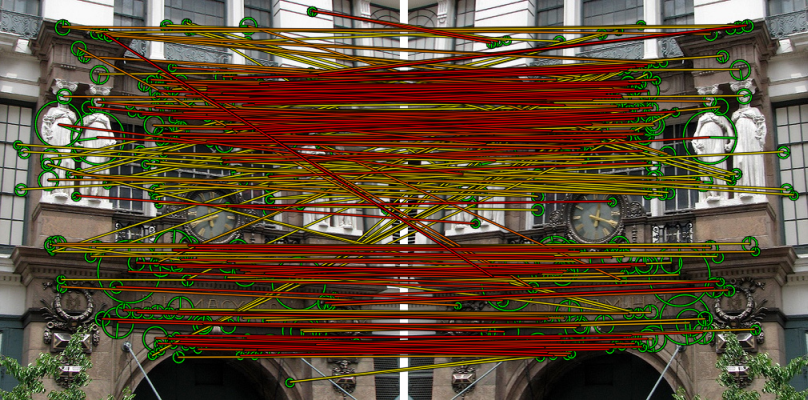

Initial (left) and selected (right) features by SSM. Original, flipped and back-projected selections shown in red, green and blue respectively.

We use two datasets, namely the new SymCity dataset and World Cities dataset. SymCity is a dataset of $953$ annotated images from Flickr, split in $299$ small groups depicting urban scenes. From each group, a single image is inserted in the database, while all other $654$ images are only used as queries. For each query image we then wish to retrieve only a unique database image, and the precision measurement actually depends only on its ranked position. The distractor set of World Cities consists of $2$M images from different cities; we use the first one million as distractors in our experiments.

We also compare to three alternative, simple selection criteria for single images, where in particular a fixed number of features $n$ is selected, having the highest detector strength, largest feature scale $\sigma$ (as done by Turcot and Lowe), or uniformly at random among the full feature set. Detector strength is a measurement that is used and typically available by each feature detector, or may be obtained by successive thresholding otherwise. It is readily available for SURF.

Mean average precision comparison versus number of distractors, with $\tau_\beta=0.4$ for HPSM and a fixed number of features $n=300$ for the remaining selection criteria. |

Mean average precision comparison against memory ratio for varying $\tau_\beta,n$ in the presence of $1$M distractors.. |

Conferences

[ Abstract ] [ Bibtex ] [ PDF ]

PhD Thesis

[ Abstract ] [ Bibtex ] [ PDF ]